Apache Server: KeepAlive, good practice

The KeepAlive parameter matters more than you might think; we learnt that the hard way. Many good articles have already weighed the arguments for KeepAlive On versus Off, so here I'll just present a summary.

I set up our Apache server myself, on a development machine in EC2/VPC (Amazon Web Services). I set KeepAlive to On because we cared more about speed than about memory. The server ran fine, apart from occasional spikes in Apache's memory usage. The problem started when traffic to our blog began to grow. Suddenly it was practically impossible to connect to the server: it was running low on memory, with Apache using up to 1GB! And, as usual, at the worst possible moment. The first time, a graceful restart of Apache fixed the problem, but it kept getting worse.

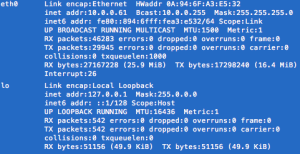

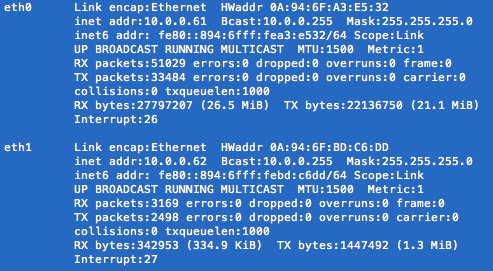

How much traffic did it take? Not much. A simple Apache Benchmark (ab) run with 20 concurrent connections and 50 requests in total was enough to make the server unusable.

```shell
# -c 20: 20 concurrent connections, -n 50: 50 requests in total
ab -c 20 -n 50 http://our-server/
```

Just imagine several (smart) spiders crawling your site at the same time; that alone would be enough.

So I had to set KeepAlive to Off. Individual requests now take a bit longer, but the server can handle much more load. You can also fine-tune the worker settings.
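To see why turning KeepAlive off frees so much capacity, here is a rough back-of-the-envelope estimate. It assumes the prefork MPM, where each open connection occupies one child process, and applies Little's law. The numbers are hypothetical and are not measurements from our server.

In the formulas, $\lambda$ is new connections per second and $S$ is the time spent actually serving requests on a connection. $T_{\text{ka}}$ is `KeepAliveTimeout`, the idle time a child keeps waiting for the next request, and it is 0 with KeepAlive Off. $m$ is the resident memory of one httpd child. The example uses $\lambda = 10\,\text{s}^{-1}$, $S = 0.2\,\text{s}$, $m = 20\,\text{MB}$, and Apache's documented default of 5 s for `KeepAliveTimeout`:

$$
N_{\text{busy}} \approx \lambda\,(S + T_{\text{ka}})
\qquad\qquad
M_{\text{Apache}} \approx \min(N_{\text{busy}},\ \text{MaxClients}) \times m
$$

$$
\begin{aligned}
\text{KeepAlive On } (T_{\text{ka}} = 5\,\text{s}):&\quad N_{\text{busy}} \approx 10 \times (0.2 + 5) = 52, \quad M_{\text{Apache}} \approx 52 \times 20\,\text{MB} = 1040\,\text{MB}\\
\text{KeepAlive Off } (T_{\text{ka}} = 0):&\quad N_{\text{busy}} \approx 10 \times 0.2 = 2, \quad M_{\text{Apache}} \approx 2 \times 20\,\text{MB} = 40\,\text{MB}
\end{aligned}
$$

Under these assumptions, almost all of the memory goes to children that are just sitting idle, waiting for a next request that may never come. Once $N_{\text{busy}}$ reaches `MaxClients`, new connections have to wait in the queue.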

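On CentOS, open the main configuration file:

```shell
sudo vi /etc/httpd/conf/httpd.conf
```

and set:

```apache
KeepAlive Off
```

Then restart Apache gracefully (`sudo apachectl graceful`) so that the change takes effect.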
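The worker settings live in the MPM section of the same httpd.conf. Below is a hedged sketch for the prefork MPM. It assumes prefork is what your httpd runs, which is the stock CentOS setup; `httpd -V` shows the active MPM. All the numbers are placeholders. The key idea is to cap the number of children at roughly the RAM you can give Apache divided by the resident size of one httpd child, as reported by `ps` or `top`.

```apache
# Sketch for the prefork MPM, using Apache 2.2 directive names.
# In 2.4, MaxClients is MaxRequestWorkers and MaxRequestsPerChild is
# MaxConnectionsPerChild (the old names are still accepted).
# All values are placeholders: size them for your own machine.
<IfModule prefork.c>
    # Children started when Apache boots
    StartServers          4
    # Keep a small pool of idle children ready for bursts
    MinSpareServers       4
    MaxSpareServers       8
    # Hard cap on simultaneous children:
    # e.g. about 1 GB for Apache / about 25 MB per child = roughly 40
    MaxClients           40
    # Recycle each child after this many connections to limit memory creep
    MaxRequestsPerChild 1000
</IfModule>
```

A lower `MaxClients` means excess visitors wait briefly in the queue instead of pushing the machine into swap.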

In conclusion: if you are going to expose your server to a broad audience, set KeepAlive to Off. If, on the other hand, you control the amount of traffic and the authorised hosts that connect to your server, you can set KeepAlive to On.
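If you do keep KeepAlive On for a controlled set of clients, a short timeout limits how long an idle connection can tie up a child process. The KeepAlive directives are valid inside a `<VirtualHost>`, so public sites can stay on Off.

This is a minimal sketch under several assumptions. It uses Apache 2.4 syntax for `Require ip`. The internal site on port 8080, its `ServerName` and `DocumentRoot`, and the VPC range `10.0.0.0/16` are all hypothetical.

```apache
# Hypothetical internal site on its own port, with KeepAlive enabled
Listen 8080

<VirtualHost *:8080>
    ServerName internal.example.com
    DocumentRoot /var/www/internal

    KeepAlive On
    # Requests allowed over one connection before Apache closes it
    MaxKeepAliveRequests 100
    # Seconds an idle connection may hold a child process; keep it short
    KeepAliveTimeout 2

    # Only accept clients from the (hypothetical) VPC range (Apache 2.4 syntax)
    <Location "/">
        Require ip 10.0.0.0/16
    </Location>
</VirtualHost>
```

The dedicated port is deliberate. For name-based virtual hosts, Apache takes `KeepAliveTimeout` from the first virtual host matching the address and port. A per-site value on a shared port may therefore be ignored.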

As a final piece of advice, block common attacks on your server. I use the following mod_rewrite rules, the same ones that are also used with phpMyAdmin. You can add them to your VirtualHost configuration or to an .htaccess file.

```apache
# The rules below do nothing unless the rewrite engine is switched on
RewriteEngine On

# Allow only GET and POST verbs
RewriteCond %{REQUEST_METHOD} !^(GET|POST)$ [NC,OR]

# Ban typical vulnerability scanners and others
# Kick out script kiddies
RewriteCond %{HTTP_USER_AGENT} ^(java|curl|wget).* [NC,OR]
RewriteCond %{HTTP_USER_AGENT} ^.*(libwww-perl|curl|wget|python|nikto|wkito|pikto|scan|acunetix).* [NC,OR]
RewriteCond %{HTTP_USER_AGENT} ^.*(winhttp|HTTrack|clshttp|archiver|loader|email|harvest|extract|grab|miner).* [NC]

# Any request matching one of the conditions above gets 403 Forbidden
RewriteRule .* - [F]
```
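If you go the .htaccess route, Apache only honours mod_rewrite directives there when the directory allows it. The `FileInfo` override must be permitted, and per-directory rewriting also needs `FollowSymLinks` (or `SymLinksIfOwnerMatch`). A minimal sketch for httpd.conf, assuming the CentOS default document root `/var/www/html`; adjust the path to your site:

```apache
# Let .htaccess files under the document root use mod_rewrite directives
<Directory "/var/www/html">
    AllowOverride FileInfo
    Options +FollowSymLinks
</Directory>
```

Putting the rules directly in the VirtualHost avoids this. It also saves Apache from looking for .htaccess files on every request.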

I hope this was useful, and don't forget to optimize your server!