We run our Virtual Private Cloud (VPC) on Amazon Web Services (AWS). In this case we wanted to add an extra network interface to one of our servers, which runs an Amazon AMI (CentOS). This should be an easy task that takes about 10 minutes, but you need to be careful; otherwise you'll spend much more time.

The first step is to attach an Elastic Network Interface (ENI) to your instance. You can do this from the Amazon console by first creating a new interface (if you don't have one available) and then attaching it, either when launching a new instance or while your instance is stopped.

Next, attach an Elastic IP address to your interface (if you need one). At this point your instance should have two network interfaces, each with an Elastic IP.
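If you prefer scripting these console steps, they can be sketched with the AWS CLI. This is only a rough outline, assuming the AWS CLI is installed and configured with credentials; the function names and every ID passed to them are hypothetical placeholders. By default the functions only print the commands (DRY_RUN=1) so you can review them first.

```shell
#!/bin/sh
# Sketch only: all IDs passed to these functions are placeholders.
# With DRY_RUN=1 (the default) commands are printed, not executed.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create a new ENI in a subnet with one security group.
# usage: create_eni SUBNET_ID SECURITY_GROUP_ID
create_eni() {
    run aws ec2 create-network-interface --subnet-id "$1" --groups "$2"
}

# Attach an existing ENI to an instance as its second interface (index 1).
# usage: attach_eni ENI_ID INSTANCE_ID
attach_eni() {
    run aws ec2 attach-network-interface \
        --network-interface-id "$1" --instance-id "$2" --device-index 1
}

# Associate an already allocated Elastic IP with the ENI.
# usage: associate_eip ALLOCATION_ID ENI_ID
associate_eip() {
    run aws ec2 associate-address --allocation-id "$1" --network-interface-id "$2"
}
```

Export DRY_RUN=0 before calling the functions once the printed commands look right. The ENI ID needed by attach_eni comes from the output of the create step.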

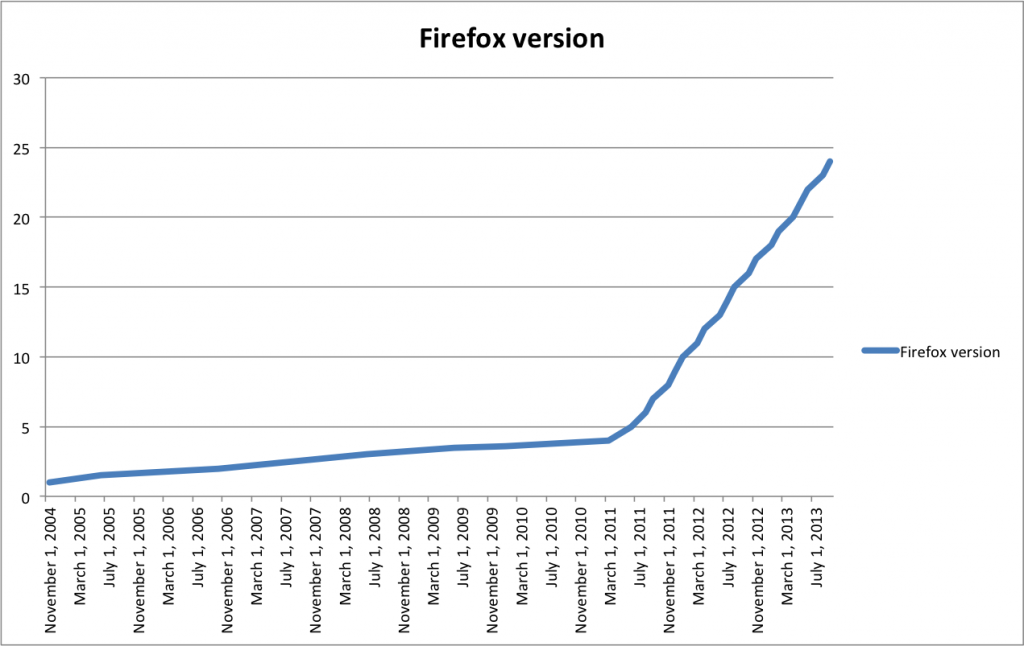

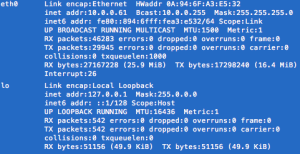

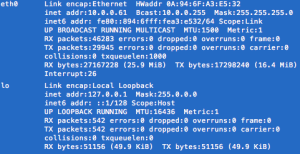

Connect to your VPC instance as usual and run the ifconfig command in your shell to see the list of interfaces.

ifconfig

What you see is the default interface (eth0) and the loopback one. Next you need to create the new interface (e.g. eth1) manually, which is rather straightforward.

Edit: There is no need to create an interface configuration manually; Amazon provides the ec2-net-utils package to do that for you. Run the command below to configure and bring up your new interface.

sudo ec2ifup eth1

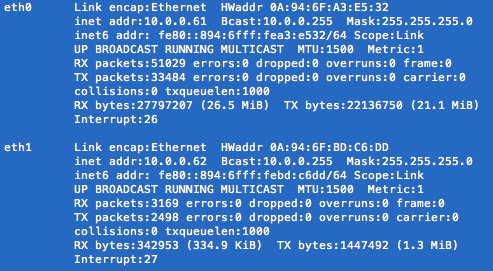

ifconfig

You should see your new interface listed as eth1, as in the image below (or eth2, as the case may be). It is now reachable from other hosts in the same subnet (or from the internet if an Elastic IP is attached to it).
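To make the same check from a script rather than by reading the ifconfig output, one option is to look under /sys/class/net, where Linux exposes one entry per network interface. The helper names below are hypothetical, and the optional directory argument exists only so the functions can be tried against a test directory.

```shell
#!/bin/sh
# Succeeds if the named interface exists.
# usage: has_iface NAME [SYS_DIR]   (SYS_DIR defaults to /sys/class/net)
has_iface() {
    [ -e "${2:-/sys/class/net}/$1" ]
}

# Prints every interface name, one per line.
# usage: list_ifaces [SYS_DIR]
list_ifaces() {
    ls -1 "${1:-/sys/class/net}"
}
```

For example, `has_iface eth1 && echo "eth1 is present"` prints the message only when the new interface shows up.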

If you make a mistake, you can bring the interface down with the counterpart command:

sudo ec2ifdown eth1

To configure the interface manually instead, run the commands below.

cd /etc/sysconfig/network-scripts

sudo cp ifcfg-eth0 ifcfg-eth1

sudo vi ifcfg-eth1

The *only* parameter you need to change is the device

DEVICE=eth1

(i.e. setting the name). Save the changes and quit the editor.
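The copy-and-edit step can also be done without opening an editor. The following is a minimal sketch, assuming the source file contains a line starting with DEVICE=; the function name clone_ifcfg is made up for illustration.

```shell
#!/bin/sh
# Print a copy of an ifcfg file with only its DEVICE= line rewritten.
# usage: clone_ifcfg SOURCE_FILE NEW_DEVICE
clone_ifcfg() {
    sed "s/^DEVICE=.*/DEVICE=$2/" "$1"
}
```

From /etc/sysconfig/network-scripts you could then run `clone_ifcfg ifcfg-eth0 eth1 | sudo tee ifcfg-eth1 > /dev/null` and inspect the resulting file before restarting the network.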

Finally, restart the network:

sudo /etc/init.d/network restart

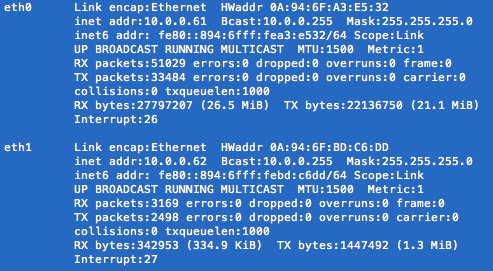

If you run ifconfig again, you should now see your new interface in the list.

For a longer explanation of how to set up an interface in your instance, have a look at this tutorial.

That’s it: you should now have both interfaces working. To be safe, check that you can reach both of them (e.g. eth0 and eth1 in the example). If you find a problem, such as only one interface being reachable, go to your Amazon console, detach and reattach the new interface (ENI), and run the ec2ifup command again. Also check your firewall settings.
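A quick way to test both addresses from another host is a small ping loop. This is a sketch under a few assumptions: the ping flags are those of the Linux iputils version, ICMP is allowed by your security groups and firewall, and the function names and example addresses are hypothetical.

```shell
#!/bin/sh
# Send one ICMP echo request with a 2-second timeout.
probe() {
    ping -c 1 -W 2 "$1" > /dev/null 2>&1
}

# Report reachability for each address; return non-zero if any failed.
# usage: check_hosts ADDRESS...
check_hosts() {
    failed=0
    for addr in "$@"; do
        if probe "$addr"; then
            echo "$addr reachable"
        else
            echo "$addr UNREACHABLE"
            failed=1
        fi
    done
    return "$failed"
}
```

Call it with the address of each interface, for example `check_hosts 10.0.0.10 10.0.0.11`. An unreachable result can also mean that ICMP is blocked rather than that the interface is down.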

For a longer explanation of ENIs and the ec2-net-utils you can refer to the EC2 user guide.

Thanks for reading; I hope it helps somebody.